The truth about those "Russian-linked Twitter accounts"

Instead of exposing disinformation, Matt Taibbi gave a master class in manufacturing it.

Matt Taibbi on Friday continued what has become a weekly tradition for him of rifling through leaked emails from Twitter executives to find messages that he can cherry-pick and use to manufacture “evidence” supporting a predetermined narrative. To date, his primary interests appear to be promoting the idea that conservative Twitter accounts were unfairly censored — a claim that has been investigated and disproven in multiple studies — and discrediting the Russia investigation and the notion of Russian interference more generally.

This week’s focus was the Hamilton 68 Dashboard, a tool developed by the German Marshall Fund of the United States (GMFUS) and the Alliance for Securing Democracy (ASD) in August 2017 for the purpose of providing “a window into Russian propaganda and disinformation efforts online,” according to the website. The dashboard tracked keywords, hashtags, and URLs shared by 600 accounts linked to Russian influence activities, and provided updated summaries of the activity a few times a day. It was widely cited in media reports and, when used correctly, was a helpful tool to point researchers (like myself) in the direction of possible Russian influence or disinformation campaigns. It wasn’t meant to be a diagnostic marker of Russian cyber operations, and it worked best when it was used as a starting point for deeper investigation.
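The dashboard’s code was never published, so what follows is only a minimal sketch of the kind of aggregate summary described above: counting the hashtags and URLs shared by a fixed set of monitored accounts over some window of posts. The `Post` record, its fields, and the `summarize` function are hypothetical names chosen for illustration, not anything Hamilton 68 actually used.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Post:
    """One post from an account (hypothetical schema, for illustration only)."""
    account: str
    hashtags: list[str] = field(default_factory=list)
    urls: list[str] = field(default_factory=list)


def summarize(posts: list[Post], monitored: set[str], top_n: int = 3) -> dict[str, list[tuple[str, int]]]:
    """Return the most common hashtags and URLs shared by the monitored accounts."""
    hashtags: Counter[str] = Counter()
    urls: Counter[str] = Counter()
    for post in posts:
        if post.account not in monitored:
            continue  # only the tracked accounts contribute to the summary
        # Lowercase hashtags so #ReleaseTheMemo and #releasethememo are counted together
        hashtags.update(tag.lower() for tag in post.hashtags)
        urls.update(post.urls)
    return {
        "top_hashtags": hashtags.most_common(top_n),
        "top_urls": urls.most_common(top_n),
    }
```

The point of the design is that nothing here looks at any single account in isolation: the output describes what the tracked group as a whole was amplifying, which is how the dashboard’s summaries were meant to be read.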

But Taibbi’s 43-tweet-long thread about Hamilton 68 — which referred to the dashboard as a “scam,” a “lie,” and a form of “digital McCarthyism and fraud,” among other things — wasn’t concerned with how the tool was designed to be used, or with how it helped researchers when it was used appropriately. Instead, Taibbi’s entire thread attempted to discredit Hamilton 68 by focusing on how the tool was misused and by offering up a series of straw-man arguments that misrepresented what the dashboard claimed to do.

About those “Russian bots”

First, let’s focus on the claims about bots. Taibbi repeatedly implied that Hamilton 68 tracked “Russian bots,” which the tool never claimed to do, at least not exclusively or even primarily. In fact, the website for the dashboard even included an explicit notice that the accounts it tracked were primarily operated by humans, with some bots and cyborgs (semi-automated accounts) mixed in. It’s not a mark against the dashboard that it didn’t track bots — it’s a mark against Taibbi for either purposefully misrepresenting its purpose, or not understanding it in the first place. My guess is that the truth is somewhere in the middle. I think he’s smart enough to know the difference, but I also think he’s smart enough to know that the “Russian bots” narrative — which at this point is basically a meme — would resonate with his target audience. Either way, Taibbi blamed the dashboard for faulty reporting on “Russian bots,” despite the fact that it was neither designed nor advertised as a bot detection system.

So what did Hamilton 68 track? Rather than focusing on individual account activity or bot detection, the tool used a network approach, analyzing the aggregate activity of accounts that regularly engaged with content “generated by attributable Russian media and influence operations.” The purpose wasn’t to see what individual accounts were tweeting, but rather to take the temperature of networks in which known pro-Russian propaganda and disinformation circulated on a regular basis. In other words, if you frequently engaged with content produced by RT, Sputnik, TASS, or any number of Russian proxy sites like SouthFront, your account could have been flagged as exhibiting behavioral indicators of Russian influence activity.
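To make the “frequent engagement” idea concrete, here is a deliberately simplified sketch. It is not the dashboard’s method, which was never published and was network-based rather than a per-account score; it only illustrates the engagement criterion described above. The seed domain list, the `flag_accounts` function, and the 0.3 threshold are all hypothetical values picked for illustration.

```python
from urllib.parse import urlparse

# Hypothetical, illustrative seed list; NOT the list Hamilton 68 used.
SEED_DOMAINS = {"rt.com", "sputniknews.com", "tass.com"}


def domain_of(url: str) -> str:
    """Return a URL's host in lowercase, without a leading 'www.'."""
    host = urlparse(url).netloc.lower()
    return host[4:] if host.startswith("www.") else host


def is_seed(url: str) -> bool:
    """True if the URL points at a seed domain or one of its subdomains."""
    host = domain_of(url)
    return any(host == d or host.endswith("." + d) for d in SEED_DOMAINS)


def seed_share(shared_urls: list[str]) -> float:
    """Fraction of an account's shared links that point to seed domains."""
    if not shared_urls:
        return 0.0
    return sum(1 for url in shared_urls if is_seed(url)) / len(shared_urls)


def flag_accounts(activity: dict[str, list[str]], threshold: float = 0.3) -> set[str]:
    """Flag accounts whose seed-domain share meets an arbitrary threshold."""
    return {acct for acct, urls in activity.items() if seed_share(urls) >= threshold}
```

For example, an account whose links are half RT articles would be flagged, while one that never links to the seed domains would not. Note what a flag like this does and does not say: it measures exposure to and amplification of a set of sources, not who operates the account or where it is located.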

(Mis)understanding influence operations

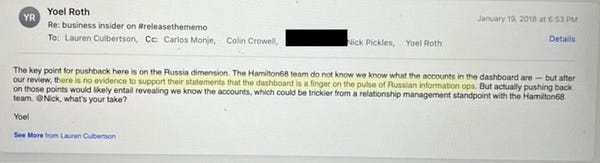

Taibbi’s thread also cited the opinions of Twitter employees who reportedly did not agree that Hamilton 68 was a reliable tool for tracking Russian propaganda. In one tweet, Taibbi displayed screenshots showing Twitter’s former head of Trust and Safety, Yoel Roth, lamenting the fact that the accounts tracked by the dashboard were “neither strongly Russian nor strongly bots,” while another employee suggested that if the top-shared URL (according to the dashboard) was only shared 138 times in two days, then the tool was not really tracking “a massive influence operations.” Roth then replied that there is “no evidence to support the statement that the dashboard is a finger on the pulse of Russian information ops.”

Let’s unpack that.

To begin with, the metrics by which Twitter executives evaluated the dashboard — the degree to which the accounts it monitored were Russian and/or bots — are not aligned with the stated methods and purpose of Hamilton 68. The dashboard didn’t claim that all or even most accounts were located in Russia, nor that they were bots. The purpose of the dashboard was to track Russian influence in cyberspace (specifically, social media), which is a different activity than tracking Russian accounts. That’s because Russian influence is transmitted in a variety of forms through many different channels, including overt Russian propaganda from state-funded outlets like RT and Sputnik, as well as a variety of proxy actors and front organizations including think tanks, NGOs, western-facing websites, academics, media personalities, and more. Most of these vehicles of influence are not located in Russia, so the existence of non-Russian accounts on the list is not an indication that the dashboard wasn’t tracking Russian influence. Indeed, the entire purpose of influence operations like this is to influence the perceptions and decision-making of the target population. The fact that American, Canadian, and British accounts were sharing Russian propaganda and identifying with Russian viewpoints and ideas is not proof of Russian influence, but it supports the notion that Russia’s influence activities may have achieved at least some of their key goals.

This also explains why Taibbi’s assessment falls flat when he criticizes Hamilton 68 because it “barely had any Russians.” Hamilton 68 wasn’t a Russian account tracker — it was a Russian influence tracker, and in order to track Russian influence, you have to track the audience(s) they’re trying to influence.


The comment from the unnamed Twitter employee about the size of the influence operation is perplexing. Hamilton 68 only tracked 600 accounts, so it didn’t purport to provide a view of all Russian influence activities, nor did it claim to track the entirety of an influence operation. The idea is that these 600 accounts were likely a representative sample; thus, by studying them, it was possible to make inferences about Russian influence activities in the broader social media environment. If a URL was shared 138 times (as mentioned in the email referenced by Taibbi) in a sample of 600 accounts, and each share came from a different account, then 23% of the entire sample (138 ÷ 600) shared that URL. That’s an extremely high degree of saturation and also an indicator of potential coordinated posting activity.
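The 23% figure is simple arithmetic, 138 ÷ 600 = 0.23, but it carries an assumption worth making explicit: it counts accounts, not shares, so it holds only if every share came from a distinct account. The sketch below (with a hypothetical `saturation` helper) computes saturation as the number of distinct sharing accounts divided by the sample size, which makes 0.23 an upper bound for 138 shares.

```python
def saturation(sharing_accounts: list[str], sample_size: int) -> float:
    """Fraction of a sample that shared a URL at least once.

    `sharing_accounts` holds one entry per share. Repeat shares by the same
    account are counted once, so the result never exceeds shares / sample_size.
    """
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    return len(set(sharing_accounts)) / sample_size
```

With 138 shares from 138 different accounts, `saturation` gives 0.23; if the same 138 shares had come from only 69 accounts posting twice each, it would give 0.115. Either way, the relevant comparison is against the size of the tracked sample, not against the size of Twitter as a whole.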

As for Roth’s assertion that there was “no evidence to support the statement that the dashboard is a finger on the pulse of Russian information ops,” this is simply false — and demonstrably so. Let’s take a closer look at that, because it also pertains to another key point that Taibbi either missed or excluded from his “exposé”: Nearly every trend that was highlighted on Hamilton 68 could be verified by triangulating the dashboard data with other sources of evidence.

The proof is in the propaganda

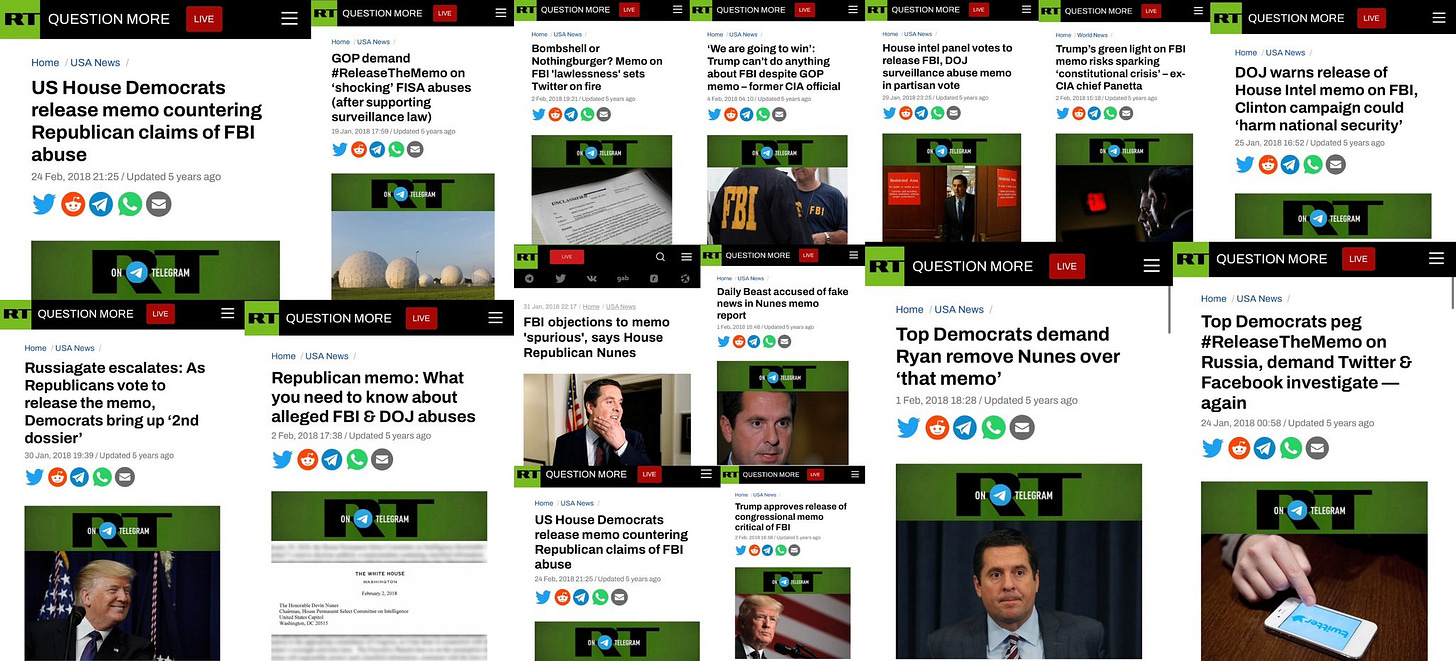

Consider the hashtag #ReleaseTheMemo, which Taibbi specifically mentioned in his thread as a false example of Russian influence that made it into media reports thanks to Hamilton 68. (In case you’ve forgotten, this incident centered around a memo written by GOP Rep. Devin Nunes’ congressional staff alleging the FBI used questionable and possibly illegal tactics during the FISA application for Trump campaign aide Carter Page. National security officials objected to its release, citing “grave concerns” about the accuracy of the memo, but congressional Republicans released it anyway on Feb. 2, 2018.)

As Taibbi noted, it was widely reported that accounts linked to Russian influence operations were involved in amplifying the hashtag “#ReleaseTheMemo,” which emerged on Jan. 19, 2018, as the 14th most popular hashtag worldwide. Some of this coverage erroneously reported that “Russian bots” were responsible for the hashtag, while other media outlets said it was being promoted by “Russian-linked Twitter accounts.”

Taibbi seems to imply that Hamilton 68 was the sole source for this claim. Thus, according to his logic, if Hamilton 68 is discredited or at least called into question, then the claim that #ReleaseTheMemo was amplified by accounts linked to Russian influence operations must also be dubious.

But Hamilton 68 wasn’t the only source.

Russian state-funded propaganda outlet RT published dozens of articles about the topic, including many that directly referenced “#ReleaseTheMemo.” To understand why that matters, you have to understand what RT is and how it is used by the Russian state.

RT is part of the overt, forward-facing layer of Russia’s propaganda ecosystem, and even its own editor-in-chief has described it as an “information weapon.” It doesn’t have editorial independence, and when RT devotes a significant amount of coverage to a single issue, it’s usually a relatively reliable indicator of a broader influence campaign. The U.S. Department of State describes RT and its fellow traveler Sputnik News as “key state-funded and directed global messengers…using the guise of conventional international media outlets to provide disinformation and propaganda support for the Kremlin’s foreign policy objectives.”


RT is not a lone actor and must be considered in the context of the propaganda ecosystem in which it exists. It is one stop along an assembly line where Russian disinformation is manufactured, published through official and unofficial Kremlin channels and proxy sources, laundered through social media and information middlemen (e.g., Russia-friendly academics, “experts,” and media figures who wittingly or unwittingly help obscure the source of Russian disinformation), and finally, integrated into mainstream news reports. Like any single-purpose machine, it is designed for use in conjunction with the other machines along the assembly line, all of which perform their designated tasks in pursuit of a common goal or outcome: the “product.” RT’s primary role in this ecosystem is to amplify content from Russian and Russian-aligned sources under the auspices of a news website, thereby creating the false appearance of credibility and legitimacy, and also localizing the context for the desired audience. RT — and to an even greater extent, Sputnik — have also been found to be involved in cyber operations connected to Russian intelligence, including through the weaponization of social media and the use of malware. Sputnik articles often link to proxy websites that are directly connected to Russian intelligence services, and Sputnik has reportedly embedded malware in its Twitter posts designed to “manipulate additional web traffic of unwitting users in order to inorganically amplify stories…” Furthermore, RT and Sputnik also have a history of providing a platform for WikiLeaks and its founder Julian Assange — both key figures in Russia’s 2016 election interference campaign.

The fact that RT (and Sputnik) featured such extensive coverage of “#ReleaseTheMemo” and the events surrounding it is not a coincidence, but rather is a sign of a possible influence campaign. This publicly verifiable evidence corroborates the data published by the Hamilton 68 dashboard, which, notably, also showed that WikiLeaks was the top domain shared alongside #ReleaseTheMemo in the network of 600 accounts it tracked.
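A statistic like “top domain shared alongside #ReleaseTheMemo” is a co-occurrence count: among posts carrying a given hashtag, tally the domains of the links they contain. The sketch below shows one way to compute it. The post schema (dicts with `hashtags` and `urls` lists) and the `top_cooccurring_domains` function are hypothetical, and the sample posts in the test are made up for illustration; this is not how the dashboard itself was implemented.

```python
from collections import Counter
from urllib.parse import urlparse


def top_cooccurring_domains(posts: list[dict], hashtag: str, top_n: int = 3) -> list[tuple[str, int]]:
    """Count link domains in posts that also carry the given hashtag.

    Each post is assumed to be a dict with optional 'hashtags' and 'urls'
    lists (a hypothetical schema). Hashtag matching ignores case and '#'.
    """
    target = hashtag.lstrip("#").lower()
    domains: Counter[str] = Counter()
    for post in posts:
        tags = {t.lstrip("#").lower() for t in post.get("hashtags", [])}
        if target not in tags:
            continue  # skip posts without the hashtag of interest
        for url in post.get("urls", []):
            host = urlparse(url).netloc.lower()
            domains[host.removeprefix("www.")] += 1
    return domains.most_common(top_n)
```

Run over the posts of the tracked accounts, a query like this answers a narrow question (which sources traveled with a hashtag inside that group), which is exactly why it is useful to check its output against independent evidence such as RT’s coverage.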

And there’s still more evidence that corroborates the dashboard’s output. A search for “ReleaseTheMemo” on the Information Operations Archive website — a resource that compiles the datasets of suspected Russian influence accounts released by Twitter and Reddit — returned more than 5,000 results. Again, this is the data that Twitter itself released and labeled as Russian influence activity associated with the Internet Research Agency, and it corroborates the claim that accounts linked to Russian influence operations were boosting #ReleaseTheMemo.

Missing the forest for the (cherry-picked) trees

It’s true that there was some incredibly lazy and irresponsible reporting that cited Hamilton 68 as its source. This is a serious problem that I have pushed back against and tried to correct from the very start, because I care about information integrity and I also recognized this as a vulnerability that could be used in the future to discredit legitimate reporting on Russian influence.

And now here we are.

The misuse of the term “bots” and the eagerness with which many people accept claims about bots, botnets, “hate accounts,” and other online harms is a problem worth discussing. When disinformation became a buzzword a few years ago, we saw this field become overrun with scams, grifters, and tech startups looking to make a profit off people’s fear and anxiety by offering solutions that weren’t worth the paper their shoddy “network graphs” were printed on. Our standards for accepting claims about online harms from companies that profit from chasing online harms need to be higher. It’s also worth talking about how journalists who cover this area generally don’t have the technical expertise to independently verify the reports that tech companies offer up as “exclusives” in the hopes of getting free advertising for their company in the form of news coverage. Nor should they be expected to have this expertise. Rather, newsrooms should make sure their journalists are supported and have the resources they need to get independent verification before running with a misleading headline or, worse, a PR report or oppo research disguised as data science. There are even companies that actively manufacture toxic online networks, then offer up their services to “clean them up.” (That’ll be a future article).

But that’s not the conversation Taibbi is trying to start. Rather, Taibbi seems intent on discrediting Hamilton 68 as part of his broader campaign to cast the entire Russia investigation and the evidence of Russian interference as a hoax. His thread is riddled with misrepresentations and tainted by bias, and most of the “proof” he offers up doesn’t actually prove much about Hamilton 68 at all; rather, it points to sloppy reporting (like the tendency to misuse the word “bots”, which, ironically, Taibbi is just as guilty of as the news organizations he lambasted in his thread) and a Twitter team that fundamentally misunderstood the nature of modern asymmetric information warfare and malign influence campaigns.

In one tweet, Taibbi blamed Hamilton 68 for news reports claiming that Russian-linked accounts were boosting Tulsi Gabbard during the 2020 Democratic primaries. Yet Hamilton 68 ceased to exist after 2018. So how could it be responsible for news reports that were published two years later?

In another tweet, Taibbi claimed that Hamilton 68 had “collected a handful of mostly real, mostly American accounts, and described their organic conversations as Russian scheming.” This is just patently false. The dashboard never made any claims about intentionality or witting participation, and it certainly never encouraged anyone to interpret its findings as evidence of “Russian scheming.” Furthermore, “organic conversations” can still be the result of Russian influence. In fact, a bunch of Americans organically expressing viewpoints that align closely with Russian propaganda narratives is exactly what a successful influence operation might look like.

In an effort to prove that the dashboard wasn’t really monitoring trends in Russian propaganda, Taibbi also interviewed a handful of people whom he identified as the owners of accounts that were reportedly among the 600 monitored accounts. (It’s not clear if or how Taibbi verified that he had the correct list of 600 accounts. Yoel Roth indicated that he had “reverse engineered” the list at one point, but to my knowledge, the Alliance for Securing Democracy has not released the list it used for the dashboard. Therefore, it seems possible that Taibbi’s entire exposé could be based on a faulty list of accounts.) In Taibbi’s interviews with the account owners, we once again saw no attempt to engage with the actual facts of the matter, but rather more denials of accusations that no one ever made.

There are valid critiques of Hamilton 68. It’s far from a perfect tool and it was misused and misinterpreted by a lot of major news organizations in ways that really shouldn’t have happened. But that’s still happening, with other dashboards and tools being misrepresented in media reports that prioritize the narrative over the evidence supporting it. Maybe that’ll be in Taibbi’s next exposé, but I doubt it. He, too, is chasing a narrative, just like the people he accuses of being “frauds.”
