The Anatomy of a Viral Tweet: Rage Farming Edition

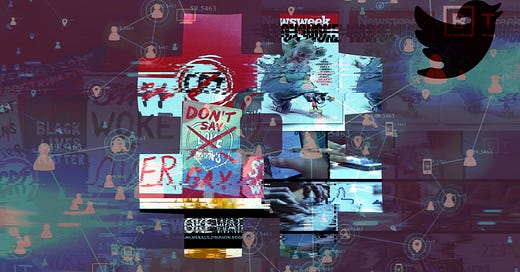

How Internet outrage is helping racists monetize hate.

As social media platforms become increasingly reliant on algorithms for, well, everything, bad actors and scam artists are finding it easier than ever to game the system. Their tactics exploit basic human vulnerabilities to boost engagement with their content, creating a way to monetize racism, sexism, and hate that requires little more than recycled content and an audience willing to engage with it.

These cheap tactics take several forms, but today, in the latest edition of “Anatomy of a Viral Tweet”, we’re going to look at a strategy that I’ll call rage farming, which is a combination of outrage bait and engagement farming. Rage farming involves posting content that is intentionally provocative and meant to stoke outrage — usually among one’s political adversaries, but sometimes among anyone who sees it — and it can be found on pretty much every major social media site.

Rage Farming 101

One of the most recent and glaring examples of this strategy in action is the weird AI/Aryan family mashup photo that went viral over the weekend and is, at least according to this guy, “Woke’s worst nightmare.”

Before we even look at the account, let’s break down the features of the tweet that are characteristic of rage farming. First of all, the entire premise of the tweet is based on a manufactured brand of outrage that the right has managed to turn into a rallying cry — a way to frame their opposition to progress and social justice and, well, the entire idea of civil rights in general, as some sort of honorable fight to save our children from having to learn about slavery or really anything at all. In the “War on Woke,” anything from children’s books to M&M’s to Target’s inventory can be the next outrage bait used to whip willing participants into a frenzy simply by attaching the word “woke” to it. It’s shamefully simple.

That’s the ploy this account is using to turn a bunch of dead-eyed AI children into content that nearly 20 million people have seen and more than 15,000 have engaged with. Culture war issues tend to produce reliably viral content, especially when they’re framed as a way for cultural warriors to signal which side of the battle they’re on. When content is positioned as ammo to use against the other side, it becomes nearly irresistible bait.

There are also some interesting psychological dynamics at play here, most of which have to do with group affiliation, in-group signaling, and performative outrage meant to serve as an observable show of loyalty and commitment to one’s own side of the war. Research shows that we’re actually quite bad at accurately perceiving true levels of outrage online, but we’re very good at modeling the behaviors of those in our social group. So, when other people are performing outrage, we tend to join in, whether or not we actually feel a sense of outrage or moral responsibility to respond to the content or behavior that sparked the spiral of reactive engagement. Two learning processes drive this: reinforcement learning, in which the positive feedback one receives for participating in displays of outrage encourages more of it, and normative learning, in which users develop outrage expressions that conform to those of their social network.

The second tactic this post deploys is what I call a “positional strawman”: it presents a point of view that no one actually holds and pretends that it represents the viewpoint of a broad swath of the poster’s adversaries. In this case, the poster would like people to believe that most members of the “woke mob” (that is, anyone to the left of today’s Republican Party) are horrified by the idea of white people with blonde hair and blue eyes having children. I don’t know a single person who would agree with that point of view, yet it’s presented here as a given, as if it’s such a commonly held viewpoint that we don’t even need to question whether it’s actually true. In reality, this is likely projection on the part of the poster. When you’re deeply troubled by the idea of non-white people having children and becoming more widely represented in the population, it’s easy to assume that people on the other side of this argument feel the same about white people. But for the most part, they don’t. And that’s the difference between these two “sides”: one side just wants to be able to live like everyone else and not be discriminated against, regardless of their skin color, hair color, eye color, etc., while the other side believes that people who don’t look like them are a threat and should be treated as such.

Besides revealing their own “anti-woke worst nightmare”, the positional strawman offered in the tweet elicits engagement from people who see their viewpoint being wildly misrepresented, and feel the need to say so. This is, of course, completely understandable, but it’s also a cheap trick to get you to engage.

Choosing race as the issue to focus on should come as no surprise, given that race has been and remains among the most contentious and divisive issues in American society, and it takes very little effort to effectively weaponize race-related content for engagement. And to be clear, while both “sides” may not be the same or even roughly equivalent (they’re not), the reason this tactic works is that both sides are willing to engage with bad-faith posts and outrage bait. And the reason both sides are willing to engage with this content is that rage farming promises benefits for those who put out the bait as well as those who take the bait.

For those who put out the bait, maximizing engagement is the whole game. Engagement tends to fuel further engagement, as each new retweet, quote-tweet, like, or comment has the potential to place your content in front of a new audience, where the cycle starts all over again. Outrage has documented contagion-like effects, so the more it spreads, the more likely it is to keep spreading.

Furthermore, thanks to the toxic effects of algorithmic amplification, negative engagement counts just as much as positive engagement — so when you engage with outrage bait, even if it’s just to condemn it or express your opposition to what it says, you’re training an algorithm to serve up more of that content. Think of it like training a personal assistant to learn your priorities based on the division of your time and attention. If your personal assistant sees that you consistently spend time on and devote your attention to racist memes and other outrage bait, they’re going to assume that these are priorities, and add more of them to your daily schedule. That’s essentially what is happening with the algorithm — it’s not just serving up content randomly, but rather it’s taking your own input into the system and delivering output based on that.

And thanks to the new monetization scheme on Twitter — pardon me, “X” — it’s now easier than ever to make a profit with the type of rage farming this account is engaging in.

Any platform with a recommendation algorithm can be exploited with tactics like this, though some platforms, like TikTok, are particularly susceptible because of the medium. Videos typically elicit strong emotional responses, and people who have strong affective responses to videos are more likely to share them. Images, too, have been shown to be particularly emotionally salient, and may contribute to the spread of mis/disinformation due to the emotions they elicit in people who view them.

Networked Rage Farming

Taking a look at the account itself gives us a more complete picture of what’s going on. The header image for the account is a picture of Yuri Bezmenov, a KGB defector whose warnings about the effects of disinformation — namely, that Western democracies and their citizens were being quietly eroded from within by a secret Soviet disinformation operation — have made him a posthumously notorious figure among those who study disinformation, but far more so among those who practice it.

There was a time, several years ago, when referencing a location in Russia or an affiliation with the infamous troll factory was a favorite troll tactic because it made liberals panic. It worked for much longer than it should have. This account’s Bezmenov header reminds me of that.

The account is embedded in a network of racist trolls and other intentionally offensive meme accounts, some of which look strikingly similar, almost as if each were one of many branded accounts targeted to a slightly different audience. Microtargeting is, of course, a well-known strategy used in marketing and political advertising, and it’s also a well-known national security risk. Whether or not that’s what is going on here isn’t clear, but what is clear is that this cheap set of tricks is embarrassingly effective and more incentivized than ever, now that it can be directly monetized on Twitter.

The accounts in this network appear to work in tandem, whether through explicit coordination or simply as a product of network effects. They all post similar graphics and meme content, all have faded cartoon-like avatars, and many have handles that essentially advertise the account as a single-purpose node in a larger network. They all retweet each other’s content and share a similar sense of “humor” that seems explicitly designed to push people’s buttons and get a reaction.

Unfortunately, outrage is a renewable resource, and there exists a seemingly endless supply of outrage merchants who are more than willing to commodify it and cash in on your clicks. The only way to stop this cycle is to stop feeding it with your engagement, but that’s easier said than done. For one thing, we’re all human, and humans aren’t exactly known for being consistently rational, particularly in emotionally charged contexts. There’s also evidence that the sociotechnical architecture of social media is actually producing more outrage, not simply giving us more opportunities to express it. Furthermore, there’s an incentive to participate in online outrage demonstrations. Doing so is often rewarded by members of one’s social network, which leads to other problematic practices, like performative allyship, and fuels the rage cycle that keeps Fox News in business and produces a spiral of destructive purity tests and social policing on the left.

Rage farming is, at best, a harmful form of grifting that exploits human vulnerabilities and games the algorithms that shape our online experiences to make money by pissing off as many people as possible. A darker take on this phenomenon is that it’s a pathological, antisocial form of trolling performed by people who revel in chaos, enjoy causing social dysfunction and discord, and are willing to leverage racism, sexism, and any other type of bigotry that can be turned into outrage. This is similar to a concept I call “informational nihilism,” which I use to describe how the far right seems to believe in nothing and everything, often at the same time. Nothing has inherent value to them, and things like information and communication exist in their world only as weapons, not as tools for genuine dialogue or engagement.

But don’t take that to mean that this problem exists on only one end of the political spectrum. Rage farming is an engagement game based on inciting one’s ideological opponents, and the left has proven itself a willing participant in this toxic exchange. If you really want to express your disgust toward outrage merchants like this, the best thing you can do is absolutely nothing. After all, your engagement is their currency, and when it dries up, the cycle of outrage will abruptly run out of gas.
